
My 2026 AI predictions (and the three things you NEED to focus on)

The next 12 months in AI will feel like 5 years — here's your roadmap.

AI with ALLIE

The professional’s guide to quick AI bites for your personal life, work life, and beyond.

Copy this entire newsletter and throw it into NotebookLM or ChatGPT or Claude, add in your 2026 AI strategy and say “based on these 2026 AI predictions from Fortune 500 AI Advisor Allie K. Miller, is my 2026 AI strategy on track? How is it on track? How is it off track? Detail both sides, pull sources as needed, rank priorities from most important to least, as well as extremely tactical suggestions to improve our 2026 planning based on AI-first best practices.”

My 2026 AI Predictions

I've been writing AI predictions for the last eight or so years, and this year felt the most strange and sci-fi. Not quite head-floating-in-a-jar-of-jelly sci-fi, but things I could only have imagined a decade ago now feel a few model releases away. As I caveat every year:

Just because I predict it, doesn’t mean it will come true.

Just because I predict it, doesn’t mean I want it to come true.

This prediction exercise matters most for forcing clarity about where we're headed—and what to do about it. As with all my content, I will aim to be as clear and actionable as possible, lest we all become floating heads in jelly.

(If you want to see how last year's predictions held up, you can view them here.)

How I create my predictions

First, I started in AI almost 20 years ago. Because of that, I have the ‘insider view’ on the pace of change and, especially, on the change in velocity over time.

Second, my head is a pattern beast. I don’t know how to explain it. I’ll hear a sentence in October that reminds me of an image I saw in March, which reminds me of a documentary from 2020, which reminds me of a facial expression I once saw Jensen Huang make.

Third, leading up to this newsletter, I had lively debates with friends and executives at AI unicorns, as well as folks at leading AI labs like OpenAI and Anthropic. And more and more, the debates feel less about whether something will happen or not, which was the case 15 years ago in my tech conversations, and much more about what will happen when it does.

The top three themes of 2026

I could stand on a podium and shout that AI will get better or faster or cheaper or smarter, but I don’t find that helpful for navigating the year ahead and finding opportunities for leverage. So instead, let me tell you the three big themes I’ll be keeping my eyes on this year. If you're feeling overwhelmed by everything below, these are the three ideas I'd focus on; the full newsletter has more detail on each.

Context Engineering and Memory. The race to help AI remember you, your work, and your preferences in new and interesting ways to better intuit intent.

Autonomy and Agency. AI that doesn't just respond in a chat but takes action everywhere. More Claude Code, more proactive AI, more AI as a teammate, less human constantly in the loop, more world models, and possibly continual learning.

Economic Value. We are moving from asking "Is AI smart?" to asking "Is AI valuable?" The benchmarks are shifting from academic ones to economic ones—and so is the divide between those who use AI well and those who don't.

2026 AI PREDICTIONS SUMMARY

Claude Code escapes the terminal and comes to every industry

AI will start prompting you

AI as a Teammate, not just a Microtasker

Context and memory scale like crazy

Voice AI explodes

New multi-agent interfaces emerge

World models break through

The beginning of continual learning

Economic value replaces academic benchmarks

The superuser vs surface user divide widens

+ 11 additional hot takes

+ 5 actions to take today

Claude Code is coming to every industry and represents the battle for the Everything Machine

Agents, agents, agents. There is zero question in my mind that Claude Code was the breakout AI product of 2025. What started as a side project at Anthropic in September 2024 became the jewel of developers everywhere. People used it to control their ovens and their lights. Many AI users on X, including Anthropic employees, have hailed it as AGI, yet most people have never heard of it.

Claude Code was the first AI system where I stopped chunking out tasks (giving AI a little bit at a time) and started giving it multiple things to do at once. Claude Code with Opus 4.5 (my favorite model right now) would reliably break them down, execute them in order, and verify its own work. And verification (maybe through reinforcement learning) is going to come up again and again this year. As a result, my check-ins with Claude became less frequent and less hands-on. I felt like Claude immediately understood my intent, sometimes better than I could communicate it. A friend described this as the shift from semantic search to agentic search: the AI wasn't just finding information, it was taking action.

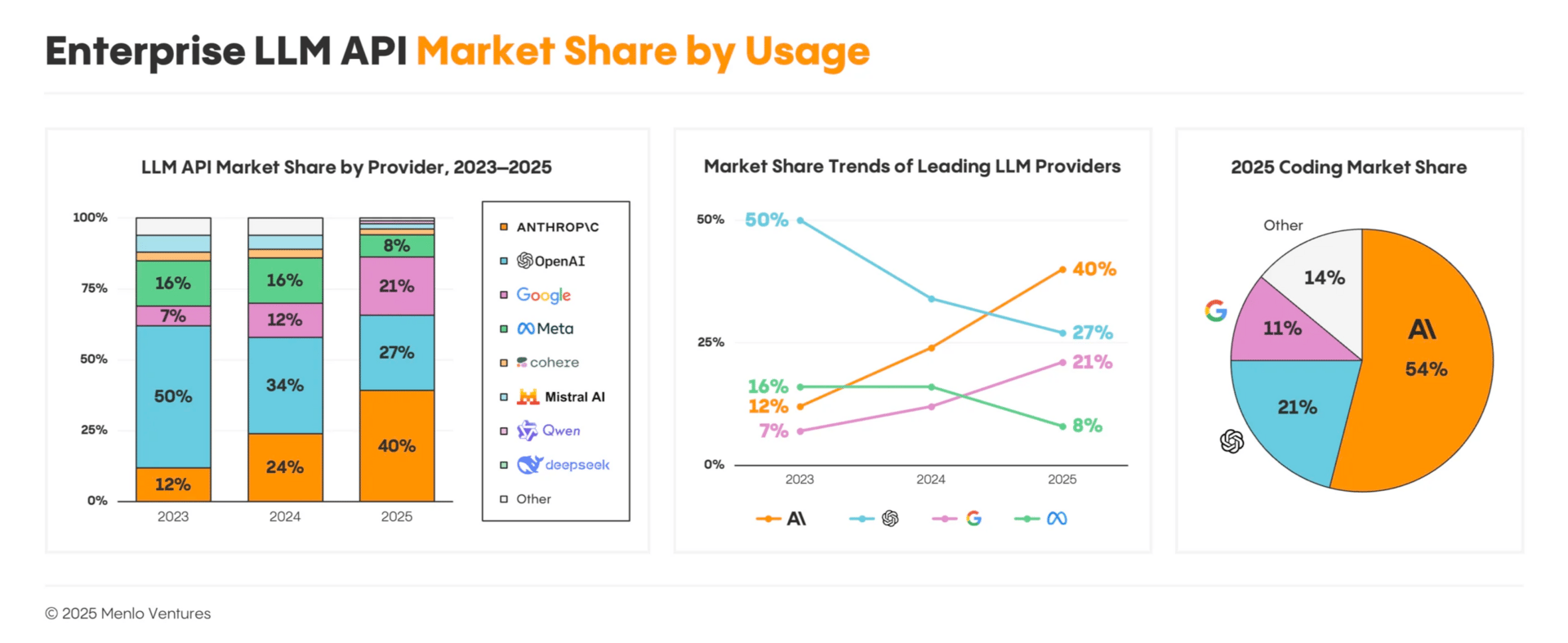

Menlo Ventures market share map: Anthropic's enterprise API share at 40%, up from 12% in 2023 and 24% in 2024; OpenAI at only 21% of the coding market

But if it was so great, why have most people never heard of it? Or even used it? Well, it lives in a terminal. It’s a command line interface (CLI) tool. And, I’m sorry to the Anthropic team, but it’s ugly for non-developers to use. That made adoption heavy among developers but nearly invisible to everyone else. According to Menlo Ventures, Anthropic's enterprise market share went from 24% to 40% over the past year, and they now hold 54% of the AI coding market. But according to Searchlight Institute, 81% of Americans surveyed had never heard of Anthropic. I believe Claude Code's power is too general-purpose to stay locked in a developer tool.

OpenAI, Google, and Anthropic are all on a mission, whether they say it explicitly or not, to build the ‘everything machine’: the one system you lean on to get everything done, the single interface (similar to the role WeChat plays in China). Claude Code feels like the closest thing to the Everything Machine that I’ve ever seen. The question every AI company will face this year: Are you the interface, or are you the connector? A lot of business models depend on getting this right.

I predict that Claude Code will expand beyond the terminal in 2026 and come to every industry. And when it does, I suspect Anthropic will rename it, because people outside engineering won't adopt something with "Code" in the name at scale. We could also see Claude gain more image, video, vision, and spatial features as Anthropic recognizes how important they are for complex RL environments, gaming, and world models, perhaps through a partnership or acquisition.

You Will No Longer Prompt AI. AI Will Prompt You.

I’ve been waiting for the death of the single-prompt chat thread and have been making demo after demo of how to get AI to proactively work for you so that you’re not the bottleneck. Well, dear friends, we are entering the era of proactive AI. These systems will sit in your Zooms, your Slacks, your Google Drives, ambiently gathering context and starting to intuit what you need before you ask. AI will prompt you. And you will review, comment, edit, and approve.

We saw a tiny glimpse of this proactive AI world with ChatGPT Pulse, OpenAI's proactive AI helper for subscribers on the $200/month plan. But I’m predicting something more agentic. As Sam Altman said on the Big Technology Podcast this month, AI will be "figuring out when to bother you, when not to bother you, and what decisions it can handle and when it needs to ask you."

And this is really where context engineering comes into play. What should an AI be listening to, reading, or watching in order to best understand how your team or company or family works? Does it have access to what it needs to do that? Are you keeping a decision log of everything your business has decided in the past and whether it went well or not? I suspect many companies will reach a crossroads this year as they realize that AI will need access to more and more offline data (which, yeah, can be creepy to gather).
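To make the decision-log idea concrete, here is a minimal Python sketch, assuming you keep the log as simple structured records. The `DecisionLogEntry` fields, the `to_context` helper, and the two sample entries are all hypothetical, made up for illustration. The point is only that a log recording what was decided, why, and how it turned out can be rendered as plain text and handed to an AI as context.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass
class DecisionLogEntry:
    """One hypothetical decision-log record: what was decided, why, and how it turned out."""
    decided_on: date
    decision: str
    rationale: str
    went_well: Optional[bool] = None  # None means the outcome isn't known yet


def to_context(entries: list[DecisionLogEntry]) -> str:
    """Render the log as plain text, oldest decision first, ready to paste into an AI's context."""
    lines = []
    for entry in sorted(entries, key=lambda item: item.decided_on):
        if entry.went_well is None:
            outcome = "outcome pending"
        elif entry.went_well:
            outcome = "went well"
        else:
            outcome = "did not go well"
        lines.append(
            f"{entry.decided_on.isoformat()}: {entry.decision} "
            f"(why: {entry.rationale}; {outcome})"
        )
    return "\n".join(lines)


# Illustrative sample entries only; these are not real company decisions.
log = [
    DecisionLogEntry(date(2025, 9, 2), "Moved support triage to an AI agent", "reduce response time", True),
    DecisionLogEntry(date(2025, 3, 14), "Paused the EMEA launch", "hiring gaps", False),
]
print(to_context(log))
```

Recording the outcome alongside the decision is the part most teams skip, and it's exactly what lets an AI tell you which past calls worked, not just which ones were made.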

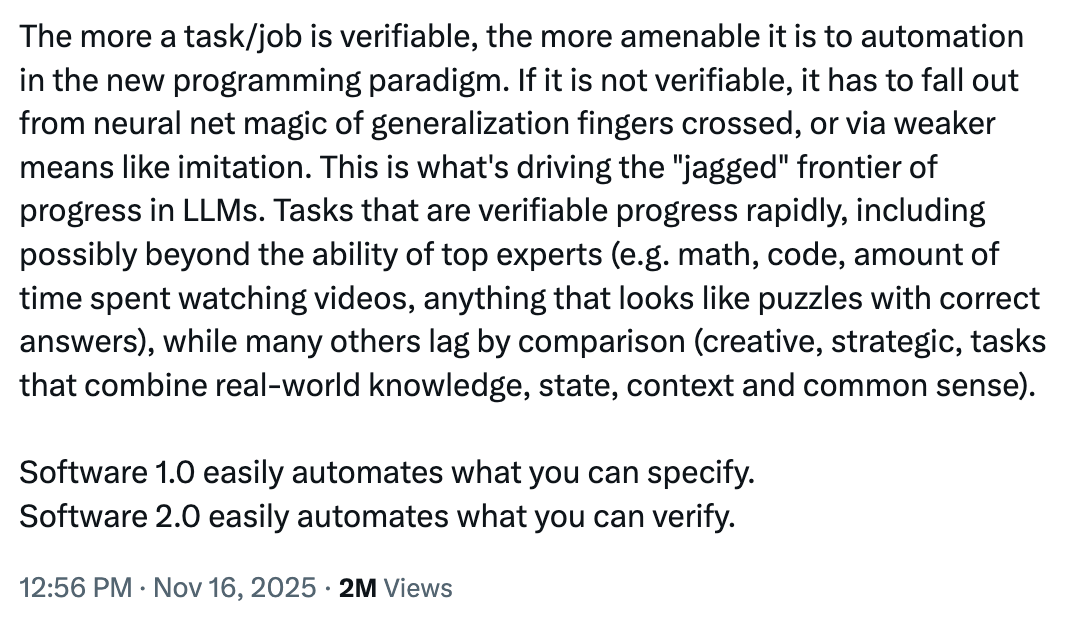

Excerpt from Andrej Karpathy tweet: “Software 2.0 easily automates what you can verify”

The 9-to-5 workday may, in practice, stop existing, because agents will be proactively working for you even while you're asleep or over the weekend. Multi-agent systems will ping each other, kick off jobs, and complete tasks in the background while you live your life. I genuinely don’t think we will see much of a shift in work hours or compensation structure because of this, but I think folks will start to realize that work hours are less about when work gets done and more about when people at the company overlap for synchronous discussions, collaborations, and approvals.

I predict that proactive AI will explode and your job with AI will start to shift from prompting to verifying. The skill becomes knowing whether to approve or reject what AI has already done.

AI as a Teammate, not just a Microtasker or Delegate

OpenAI already has Codex living in its Slack. Engineers treat it like a coworker, not a tool. They assign it tasks. It delivers. The team that built the Sora Android app did it in 28 days with AI as a core team member. Ninety percent of the code in Claude Code was written by Claude Code itself. And over the last 30 days, 100% of the contributions Boris Cherny (Claude Code's creator) made to Claude Code were written by Claude Code. This is the shift from individual-level AI to system-level AI. Instead of "I use AI for my work," it becomes "AI is part of how our organization works."

Codex lives in OpenAI’s Slack and functions as a teammate

For example, AI could automatically review every territory sales call, create dynamic training content for sellers, flag at-risk accounts before humans notice them, and initiate work for, and support, the whole team.

I predict that the most forward-thinking organizations will treat AI teammates the way they treat human teammates: with context about goals, access to systems, authority to act, and clear boundaries. The "always do / ask first / never do" protocols we’re giving to agents today will become as standard as job descriptions.
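Here is one way an "always do / ask first / never do" protocol could look in practice, as a minimal Python sketch. The action names, the `PROTOCOL` table, and the `run` helper are hypothetical, not any product's actual API; real agent platforms enforce permissions in their own ways. One design choice worth noting: any action the protocol doesn't name falls back to "ask first," so the agent asks rather than guesses when it hits something new.

```python
from enum import Enum


class Permission(Enum):
    ALWAYS_DO = "always do"
    ASK_FIRST = "ask first"
    NEVER_DO = "never do"


# Hypothetical protocol for a sales-team AI teammate; the action names are illustrative only.
PROTOCOL: dict[str, Permission] = {
    "summarize_sales_call": Permission.ALWAYS_DO,
    "draft_training_content": Permission.ALWAYS_DO,
    "flag_at_risk_account": Permission.ALWAYS_DO,
    "email_customer": Permission.ASK_FIRST,
    "offer_discount": Permission.ASK_FIRST,
    "delete_crm_records": Permission.NEVER_DO,
}


def decide(action: str) -> Permission:
    """Look up an action; anything the protocol doesn't name defaults to ASK_FIRST."""
    return PROTOCOL.get(action, Permission.ASK_FIRST)


def run(action: str, approved_by_human: bool = False) -> str:
    """Apply the protocol: refuse NEVER_DO, hold ASK_FIRST until approved, otherwise act."""
    permission = decide(action)
    if permission is Permission.NEVER_DO:
        return f"refused: {action}"  # human approval cannot override a never-do rule
    if permission is Permission.ASK_FIRST and not approved_by_human:
        return f"waiting for approval: {action}"
    return f"done: {action}"


for action in ["summarize_sales_call", "email_customer", "delete_crm_records", "rename_pipeline_stage"]:
    print(run(action))
```

Running it prints `done` for the call summary, `waiting for approval` for the customer email and for the unlisted pipeline action, and `refused` for deleting CRM records.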

Context and Memory Management Scale like Crazy

Context engineering is replacing prompting. It’s all about giving AI the right information, at the right time, in the right way.

Memory was one of the greatest releases of 2025, as I’ve mentioned before. ChatGPT remembers your preferences. Claude has super search and projects. NotebookLM organizes all your sources. These aren't mini prompt improvements; they're the start of context systems (or, as I call them, the Open Machine, the idea I named my company after).

The Claude web app and mobile app cap out on conversation length too quickly to get complex work tasks done in one chat. In 2026, I’m praying we see context length expand dramatically - at minimum 2x, possibly 10x to 100x. Gemini 3 Pro is still my go-to when I know context length is my biggest concern.

I’m also 50/50 on whether we see more portable memory this year. It could look something like an OpenAI login, where your AI context follows you the way your Apple ID does today: same knowledge, same preferences, across every device and app. We technically already have this when you log in with your ChatGPT account on ChatGPT Atlas, but I could see it expanding.

And I’m 10/90 on whether we see the beginning of merged memory this year. The idea: I could take the bucket of “Allie Context,” merge it with my sister’s context, and ask “the two of us” a question or have us complete tasks together. Think shared memory pools, collaborative context, or multi-user personalization. The technical pieces of the puzzle are emerging (e.g., persistent memory, shared conversations), but merging contexts across users raises tricky questions around consent, privacy, what happens when contexts conflict, whose "version" of shared events is canonical, and more.

I predict that gathering and managing context becomes even more important, context length restrictions become a little less annoying, and memory will get at least one big upgrade.

Voice AI explodes

I’m a voice AI superuser. 40-minute AI Walks have become my most productive writing time (I dictate to Otter AI and input that transcript into GPT-5.2 Thinking or Claude Opus 4.5 Thinking). I've built full apps with dictation while lifting weights. I have Wispr Flow on my desktop so I can dictate anything by just holding down the function key. The productivity gains from dictation are real and massive.

Voice AI will explode in 2026. But I still don't expect everyone talking to their computers in open offices. The average person, based on my comments section, is deeply uncomfortable talking to AI out loud in public spaces. I get it. It’s weird. And most people don’t even talk to Siri even though we’ve had that for over a decade. Over 100M people talk to Alexa, but that’s in the comfort of their own home. When I’m on my Claude Walks, I find myself faking the tone of what I’m saying to make it sound like I’m talking to a friend (“and theeeeen maaaaybe we could try this?”) and not to AI (“please add 3 bullets of decreasing specificity”) just to blend in.

Introverts, are you a voice-first user? If so, can you share your workflow with me? Do you talk to AI in public?

Alexander Embiricos from OpenAI said it perfectly on Lenny's Podcast this month: "Current underappreciated limiting factor to AGI is human typing speed." Let that sink in. We are literally being held back by our fingers.

I predict that voice AI will explode. I’d love to see a voice-first Claude Code-esque experience. We may see infrastructure shifts in the office (i.e., more phone booths, more microphones for every employee, more procurement of dictation tech), but I sadly believe that in 2026, voice will largely be used by superusers rather than becoming mass behavior.

We may get new multi-agent interfaces

I spoke with my friend Parth Patil (he works for Reid Hoffman and experiments with AI more than most employees at AI labs), who showed me how he multitasks with AI. And when I say multitask, I don’t mean it in the mere-mortal way, where you shop on Amazon while reading a Wall Street Journal article. No, I mean he’s running 10 to 40 concurrent AI tasks. He even used AI to build his own interface to better manage it all. He and I spoke at length this year about browsers, VR, and the need for a new interface for multi-threaded work with agents.

I predict that if we get more proactive AI as mentioned above, or more complex environments, or AI that functions as a deeply embedded teammate, we will need something more visual, more dynamic, less boxed in. And yes, obviously we all just want the Minority Report setup.

I loathe typing and am counting down the years until I can do this.

World models and new architectures emerge as potential breakthrough paths

Raquel Urtasun's Waabi is using world models to train autonomous trucks. Fei-Fei Li's World Labs is betting that spatial intelligence is the missing piece. And as Jensen Huang put it: "The ChatGPT moment for robotics is coming. Like large language models, world foundation models are fundamental to advancing robot and AV development."

World models are AI systems that don't just read and generate text but aim to understand and simulate physical environments. Think more Ender’s Game and less chatbot. Google DeepMind's Genie 3, for example, showed you could get an entire navigable 3D world based on just one prompt. TIME named it one of the best inventions of 2025.

World models matter because they unlock multimodal understanding, embodiment for robotics, and large-scale predictions that text-only models can't do. They aim to predict how actions affect environments. You can see this ‘world model’ language peppered into job postings at the big AI labs as well. And for what it’s worth, I thought we would have seen more in this space in 2025.

I predict that the race to generalization continues, with world models and new architectures emerging as potential breakthrough paths. Anthropic is the only top AI lab that has not yet meaningfully broken into this space (aside from Claude Artifacts that render with code, or Skills like frontend design or PowerPoint), so I suspect they’ll make a move. We may see complex RL environments get a new interface as well. We may even see the first commercially available world model in 2026 (Genie 3 is still research access only), and I think we will see new ways that AI models infer and act on our preferences (think: value functions, reward functions).

The Beginning of Continual Learning

In fall 2024, I was already predicting we'd see early signs of continual learning in 2026: AI that learns on the job the way humans do. Then Sholto Douglas, an Anthropic researcher formerly at DeepMind, went even further on the No Priors podcast this month: he believes continual learning gets solved "in a satisfying way" this year. Honestly, that’s a much bolder prediction than I was going to make. I’ll take the under and say we see meaningful progress toward continual learning, but not a full solution. Still, if Sholto is right, this changes everything about how we think about AI development.

I predict that we will see new research that changes our assumptions about LLM scaling—not a new architecture, but breakthroughs in systems and orchestration that make these models more efficient.

The performance and productivity conversations shift to economic value

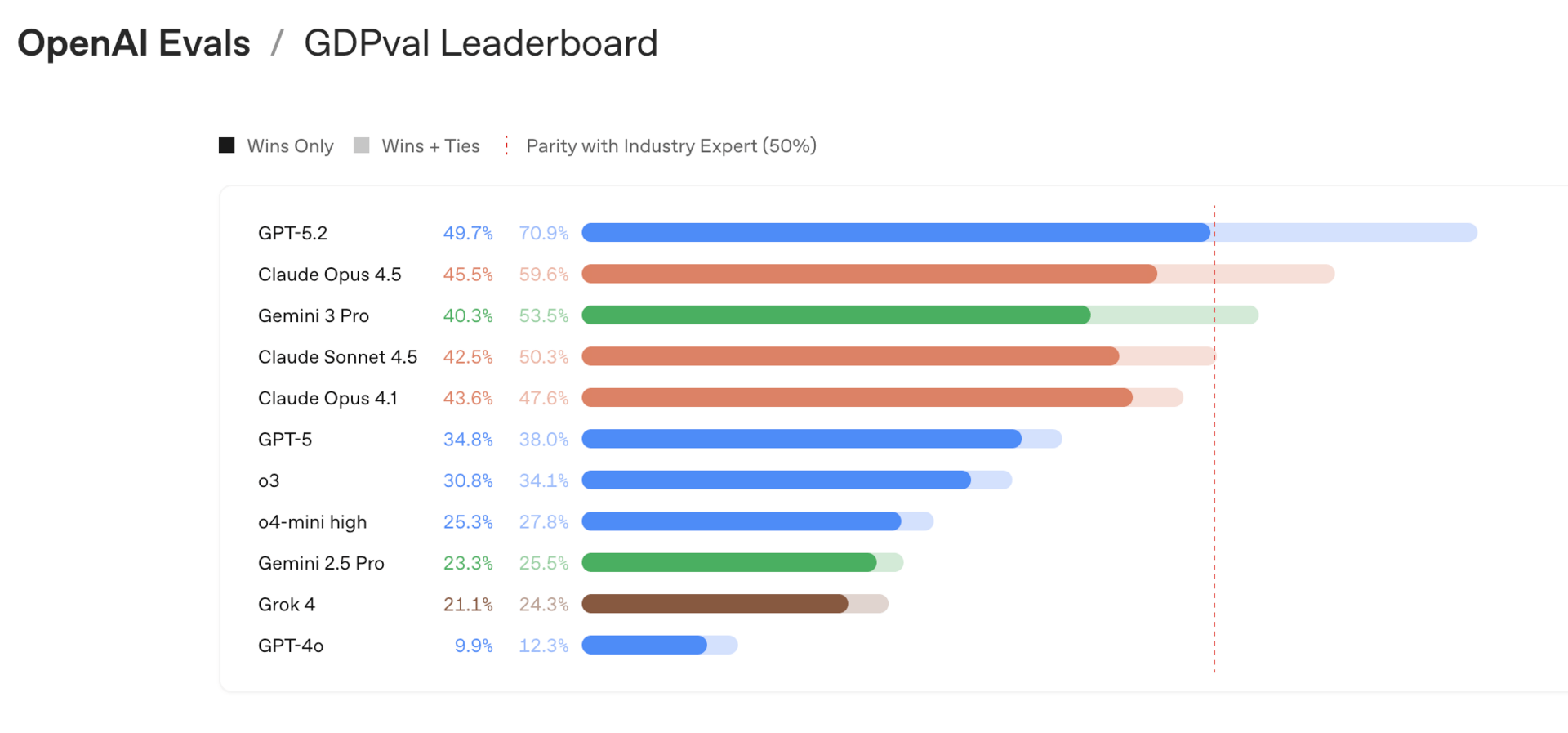

Did anyone notice the benchmark announcements from Google and Anthropic toward the end of this year? We are shifting from academic benchmarks to economic ones. One example is OpenAI's GDPval, which tests 1,320 tasks across 44 occupations from top GDP-contributing sectors. GPT-5.2 Thinking scored 70.9%, the highest score yet.

OpenAI's GDPval, the Remote Labor Index, and Vending Bench 2 measure whether AI can do work that makes money, not whether it can pass a PhD exam. Even the famous METR study measures how long a human work task an AI can take on, and we will likely see AI handling an 8+ hour task at 80% reliability this year.

We will no longer wonder if AI is smart. We will wonder if it's valuable.

I predict that 2026 brings more economic benchmarks and a noticeable shift in tone. The polite "AI augments humans" framing will sound a bit more blunt in 2026, and we’ll see more conversations about which roles or tasks are at risk.

The user divide grows between superusers, surface users, and non-users

If you attended my AI-First Conference in August and listened to my final goodbye speech, you know this has been on my mind for a while. It's also one of the trends that worries me most.

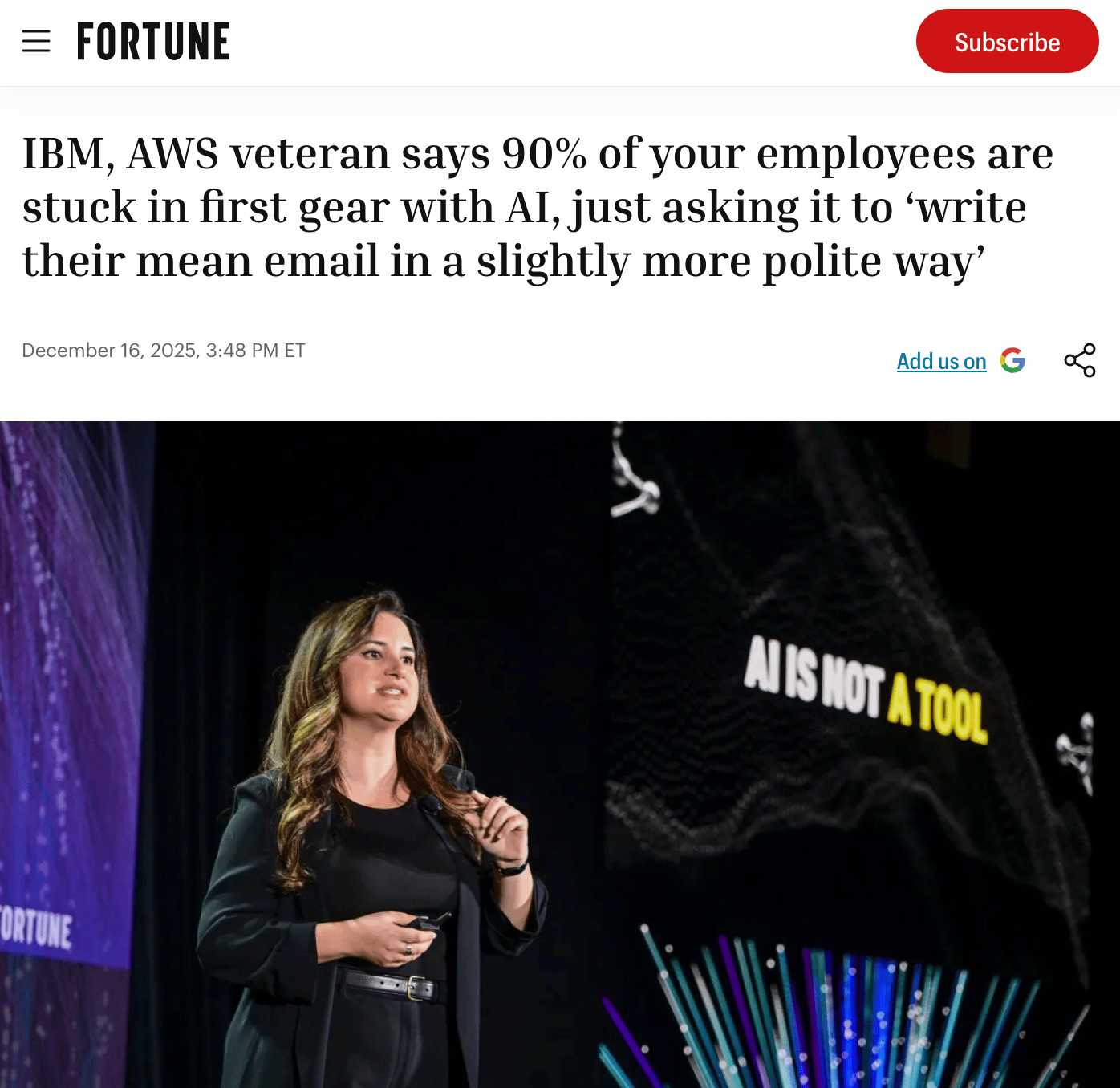

Jack Clark, co-founder of Anthropic, wrote this month: "By the summer, I expect that many people who work with frontier AI systems will feel as though they live in a parallel world to people who don't."

I've been watching this divide form. There are now three groups: true AI superusers; surface users, who use AI frequently and believe they're superusers but have put an artificial ceiling on how they use it; and people who barely use it at all. Too many companies treat AI licenses and adoption as their main metric, when those should be seen as helpful input metrics. You can have 40,000 employees all using AI daily, but as I said at the Fortune Summit, if they’re just using it to rewrite emails, you’re wasting millions of dollars.

I predict that 2026 will be the first true year where we feel the economic gap between group 1 (superusers) and everyone else. If you are in group 2 (surface users), you need to move into group 1. Move from prompting to context, move from chat to agents, move from single-threaded to multi-threaded, move from execution to systems thinking, move from reactive to proactive, and move from vanity metrics to value.

Additional Hot Takes for 2026 🔥

Unlike the above predictions, which draw on years of watching how AI actually plays out inside organizations, this next section is pure speculation. Me throwing darts at a wall. Take these with far less certainty. Think of this more as fodder for your next tech group dinner or a fun debate with me at my next visit to your company.

Data center backlash goes mainstream. Data Center Watch said $64 billion in projects are already blocked or delayed. Michigan had a data center protest just a few weeks ago. And a Heatmap poll of almost 4,000 American voters found that only 44% of them would welcome a data center in their community. The infrastructure AI needs will face growing resistance, similar to the HQ2 resistance when Amazon tried to build in New York.

Gemini takes share from Microsoft Copilot. Google is catching up fast with models that integrate seamlessly into existing Google Workspace workflows (also YouTube now commands 13.9% of all U.S. TV viewing - more than any streaming service, cable network, or broadcast channel - and that video data advantage translates into AI training). Enterprise customers will increasingly question the Microsoft premium and pivot to ChatGPT, Claude, or Gemini.

Still no 1-person $1B company with real legs. We'll hear the hype. We'll see the claims. Sam Altman has been talking about it for at least two years now (and recently started talking about a 0-person fully autonomous enterprise). But I think a sustainable billion-dollar business will still require more than one person with AI tools in 2026.

Neo robot delayed (again). I’m less bullish on humanoid robots than most. The Neo robot won't ship in 2026. Expect a delay to 2027. We'll see demos. We won't see mass deployment.

AI companionship lawsuit. There will be a major lawsuit between an AI toy company and consumers. I don’t trust the direction this space is headed and would not buy an AI toy for a child today. Too many stories of loose guardrails.

China dominates open source. Chinese models went from 1.2% to 30% of global open source share in one year (yes, you read that right). Qwen has 100,000+ derivatives on Hugging Face. Sam Altman admitted in early 2025 that OpenAI was on the "wrong side of history" regarding open source. The implications for AI geopolitics are massive. Tensions rise, and the US makes a serious push into open source AI after realizing how important it is for global adoption. By EOY, China will still be in the lead.

Gen Z workforce impact. Gen Z unemployment already sits at 10.8% for ages 16-24, compared to 4.3% overall (Bureau of Labor Statistics, August 2025). Oxford Economics estimates this costs $12 billion per year, with more young people living at home than at any time in decades. Job loss from AI will hit Gen Z hardest. Many will exit the corporate workforce entirely and move into freelance, entrepreneurship, and the gig economy.

OpenAI will release vertical AI apps or agents. I remember several vertical partnerships being announced in 2025. ChatGPT’s biggest stories are in health, and Microsoft Copilot’s number one use case of the year was health. This is a gamble, considering everything I said about the Everything Machine. Maybe we’ll see one in the health or mental health space.

AI companionship stigma fades. Loneliness is an epidemic. AI companions are getting better. The social judgment around AI relationships will soften, especially as mainstream apps add relationship-like features.

Collaborative AI memory emerges. Sora already lets users remix each other's creations. The next frontier: shared AI memory. Teams, couples, organizations with merged AI context. As mentioned in the context section, we may see "merge memory with collaborators" features in major platforms by end of 2026.

Non-techies join the model welfare discussion. This Amanda Askell interview was one of my favorite videos of the whole year. She’s a philosopher at Anthropic who works on Claude’s personality and character. I think non-techies are going to start asking about model welfare and how we care for AI models compared to humans.

Preparing for 2026: Leverage, Leverage, Leverage

The gap between AI leaders and laggards will widen dramatically. Here's how to stay on the right side:

Build context systems, not prompt libraries. Your competitive advantage is organizational knowledge that AI can access and act on.

Implement agent protocols now. Define what AI can do autonomously before you need to.

Measure economic impact, not just usage. "We have 10,000 ChatGPT users at our company" means little. "AI reduced customer response time by 40%" or “we spun up a new business line in 4 months instead of 12” means everything.

Train on evals, not just tools. The skill gap isn't knowing which AI to use. It's knowing how to maximize AI’s superpowers and how to determine whether the output is good.

Prepare for the platform wars. Your favorite SaaS tool might become an AI feature. Your workflows might consolidate into a single AI interface. Build flexibility now.

2026 will separate people and organizations into “actual superusers”, “surface users”, and “non-users”. If you are looking to get ahead and stay ahead in AI (which I assume you are, considering you are subscribed to a newsletter that literally helps people do that), you need to do everything in your power this year to go from a surface user to an actual superuser.

The question isn't whether you'll use AI. There are over a billion weekly active users of AI. It’ll come down to whether you use it well enough to matter.

Here's to a transformative 2026. Or at least a weird one. I’ll see you in the future.

Stay curious, stay informed,

Allie

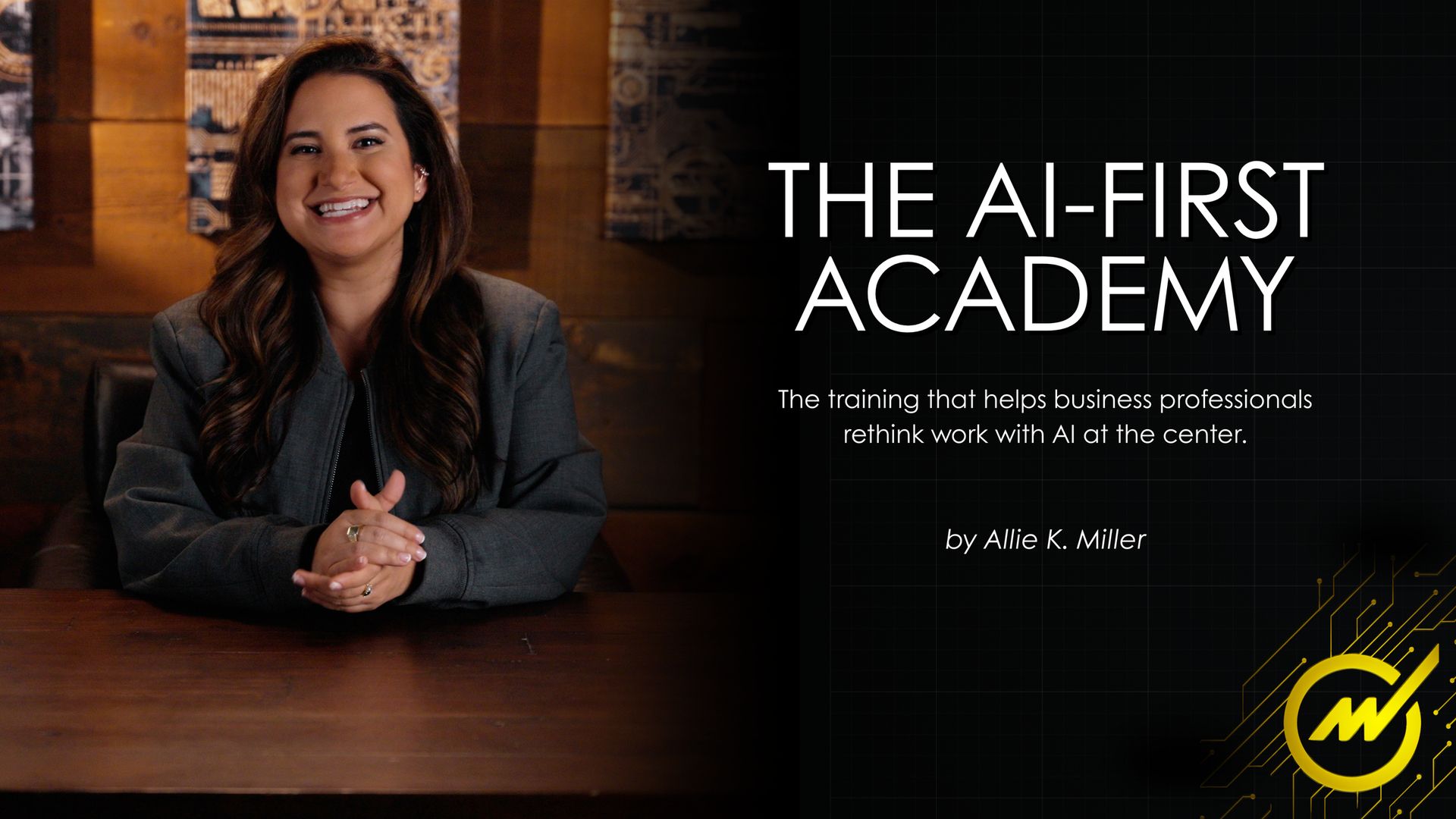

Inside AI-First Academy, you'll learn how to rebuild your work from the ground up. We cover the full progression: from AI Basics to custom assistants to full workflow automation. This is the same training professionals at Amazon, Apple, and Salesforce are using to stop dabbling and start building.

You'll learn how to:

✔ Write prompts that produce usable, business-ready outputs (not generic fluff)

✔ Build custom GPTs and reusable AI systems that handle your recurring tasks

✔ Automate research, reports, meeting synthesis, and multi-step workflows

✔ Prototype real tools and dashboards - no code required

✔ Deploy AI agents that work for you, not just with you

✔ Navigate the best of Claude, ChatGPT, and the latest agentic features with instant access to our 90-minute Agentic AI Workshop

(Want to upskill your whole team? Get a company-wide license)

Feedback is a Gift

I would love to know what you thought of this newsletter and any feedback you have for me. Do you have a favorite part? Wish I would change something? Felt confused? Please reply and share your thoughts or just take the poll below so I can continue to improve and deliver value for you all.

What did you think of this month's newsletter?
