The Entire 2025 AI Year in Review

What Every Business Leader and Solopreneur Actually Needs to Know

AI with ALLIE

The professional’s guide to quick AI bites for your personal life, work life, and beyond.

I know what your inbox looks like right now. Every vendor, every analyst, every consultant wants to tell you about AI. You're outwardly positive on the technology and internally terrified that you'll be the leader who gets it wrong. You don't need 100 more things to do or tools to try (but that won’t stop me from naming a few in this newsletter). You need to understand what actually happened this year, what it means, and what to do about it. Here's my attempt to give you exactly that.

Please copy this entire newsletter, throw it into NotebookLM, ChatGPT, or Claude, add your 2026 AI strategy, and say: “Based on the key happenings in AI in 2025 according to Allie K. Miller, is my 2026 AI strategy on track? How is it on track? How is it off track? Detail both sides, pull sources as needed, rank priorities from most important to least, and give extremely tactical suggestions to improve our 2026 planning.”

Reflecting on my 2025 Predictions and What Actually Happened

One year ago, I said the three biggest prediction themes were: (1) test-time AI and long horizon reasoning, (2) AI agents, and (3) energy and compute. I feel like I nailed it.

Feel free to revisit my 2025 predictions from a year ago to see how they turned out. In the meantime, let’s look back together at the 2025 we actually lived, the most advanced year in AI yet.

TL;DR

I wrote 5000 words. Whoops. Here’s the quick headline version.

Models Feel AGI-ish and Big Features Get Copied in Weeks, Not Years

A Few Tools Completely Changed the Way We Work: Claude Code, Wispr, Nano Banana Pro, NotebookLM

Context Engineering Takes Center Stage and AI Starts to Get More Proactive

Data Centers Were the Single Largest Driver of American Economic Growth

AI Dollars Are Still Mostly Going to Hardware and Not Transformation

AI Labs Grow in Revenue, but the Business Model Is "Spend Now, Reap Later"

95% of Enterprise AI Pilots Supposedly Delivered Zero Measurable P&L Impact

Gen Z Doesn't Google Anymore, They Ask AI

Can AI Do Your Job? Increasingly, Though Sparingly, It Can

72% of Teens Have Used an AI Companion and 28% of Adults Have Had Romantic Relationships With AI

AI That Remembers You Is Fundamentally Different From AI That Does Not

The Top AI Talent Now Commands Pro Athlete Compensation

The AI Device Revolution Hasn't Happened Yet

Models Feel AGI-ish and Big Features Get Copied in Weeks, Not Years

Has everyone seen the viral tweets saying Claude Code + Claude Opus 4.5 = AGI?

Two years ago, everyone thought OpenAI was untouchable. (And actually 10 years ago, people thought Google DeepMind was untouchable too.) January 20th shook that up. DeepSeek released their new model R1 with visible thinking steps, that little chain-of-thought reasoning (“The user is asking about x, I will try y”) exposed directly to users. They claimed less than $6 million in training costs for the base model (though the CEO of Anthropic estimated their total investment at closer to $1 billion). The DeepSeek app hit 16 million downloads in 18 days, surpassing ChatGPT's launch numbers (9 million in the same time frame).

OpenAI and Anthropic copied the visible reasoning feature within weeks. Not years. Not months. Weeks.

This pattern repeated throughout the year. Google launched Gemini 3 in November with a single-day rollout across their entire ecosystem. It outperformed GPT-5.1 on several benchmarks. Marc Benioff publicly switched to Gemini and said "I'm not going back."

Two weeks later, Sam Altman declared "code red" internally (I was quoted in Fortune on it), warning of "temporary economic headwinds" and "rough vibes."

Claude Opus 4.5 hit 80.9% on SWE-bench Verified, making it the first model to score over 80% on this benchmark of real-world software engineering tasks. Then GPT-5.2 hit 70.9% on GDPVal (by comparison, GPT-5 was at 38.8%). Pricing on Claude Opus 4.5 is now competitive enough that Anthropic is telling developers it "can be your go-to model for most tasks." And who knows, maybe the copy cycle will shrink from weeks to days. OpenAI, for example, used Codex to build the entire Sora Android app in only 28 days.

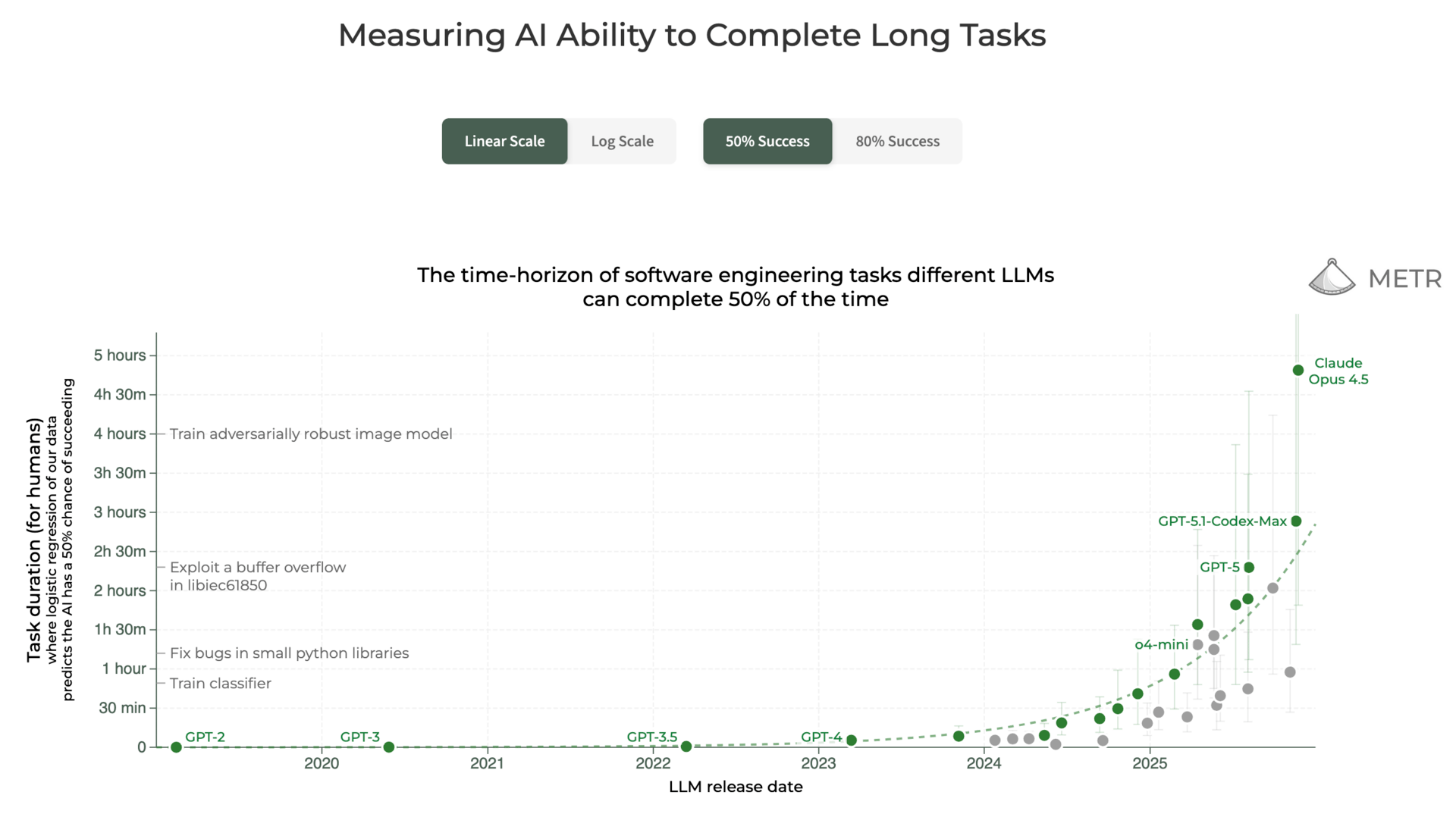

Add in the trendline from the famous METR study, and you've got a funky recipe brewing for 2026. The length of tasks AI agents can complete with 50% success has been roughly doubling every seven months. Claude Opus 4.5 now sits at roughly 4.5-hour tasks (measured by how long those tasks take skilled humans), up from ~50 minutes just earlier this year. And if you look at the graph, we’ve moved from "fix a small bug" territory to "train adversarially robust image model" territory. Extrapolate that curve and you hit the equivalent of multi-workday autonomous projects at 50% success in 12-18 months.
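To see how quickly that doubling compounds, here's a back-of-the-envelope sketch in Python. It is a simple exponential extrapolation, not METR's own methodology, and it assumes the figures above hold: a ~4.5-hour horizon today and a ~7-month doubling time. The 8-hour workday used for the conversion is an added assumption.

```python
# Back-of-the-envelope extrapolation of the "task horizon" trend.
# Assumptions (illustrative only, not METR's model):
#   - current 50%-success horizon: ~4.5 hours of skilled-human time
#   - doubling time: ~7 months
#   - one workday = 8 hours (added assumption for the conversion)

CURRENT_HORIZON_HOURS = 4.5
DOUBLING_MONTHS = 7.0
WORKDAY_HOURS = 8.0


def projected_horizon_hours(months_ahead: float) -> float:
    """Horizon after `months_ahead` months if it doubles every DOUBLING_MONTHS."""
    return CURRENT_HORIZON_HOURS * 2 ** (months_ahead / DOUBLING_MONTHS)


for months in (0, 12, 18):
    hours = projected_horizon_hours(months)
    print(f"{months:>2} months out: ~{hours:.1f} h (~{hours / WORKDAY_HOURS:.1f} workdays)")
```

Under those assumptions, the horizon reaches roughly 15 hours (about two workdays) at 12 months and roughly 27 hours (about three workdays) at 18 months. Trendlines can bend, so treat this as a planning exercise, not a forecast.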

In 2026, we will likely see more shifts in how work gets done, driven by multi-agent systems and AI as teammates. I’ll cover that more in my 2026 AI predictions newsletter.

Takeaway: Model differentiation is increasingly coming down to UX, pricing, ecosystem integration, and behavior, not PhD benchmarks. Don't bet your strategy on any single provider maintaining a permanent lead (as of this newsletter, my favorite model is Claude Opus 4.5). Build modularly and for portability.
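What does "build modular" look like in practice? One common pattern, sketched below in Python, is to have your business logic call a thin interface you own rather than any one vendor's SDK directly. The names here (ChatModel, FakeProviderA, FakeProviderB, summarize) are hypothetical placeholders. In a real system, each adapter would wrap an actual provider's client library.

```python
from typing import Protocol


class ChatModel(Protocol):
    """Hypothetical interface: anything that turns a prompt into text."""

    def complete(self, prompt: str) -> str: ...


class FakeProviderA:
    """Hypothetical stand-in for a wrapper around one vendor's SDK."""

    def complete(self, prompt: str) -> str:
        return f"[provider-a] {prompt}"


class FakeProviderB:
    """Hypothetical stand-in for a wrapper around a second vendor's SDK."""

    def complete(self, prompt: str) -> str:
        return f"[provider-b] {prompt}"


# Business code depends only on ChatModel, so switching vendors
# becomes a configuration change rather than a rewrite.
PROVIDERS: dict[str, ChatModel] = {"a": FakeProviderA(), "b": FakeProviderB()}


def summarize(text: str, provider: str = "a") -> str:
    model = PROVIDERS[provider]
    return model.complete(f"Summarize: {text}")


print(summarize("Q3 board notes", provider="a"))
print(summarize("Q3 board notes", provider="b"))
```

Because `summarize` never references a specific vendor, changing which model leads this quarter only touches the `PROVIDERS` mapping.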

A Few Tools Completely Changed the Way We Work: Claude Code, Wispr, Nano Banana Pro, NotebookLM

AI can actually complete tasks for us. The AI agents market hit ~$7.6 billion in 2025 and is projected to reach $50 billion by 2030. McKinsey shared that 23% of survey respondents are already scaling an agentic AI system in their company, and an additional 39% say they have begun experimenting with AI agents. 89% of CIOs consider agent-based AI a strategic priority. And Claude Code gets my “number one tool of the year” award. I’ve never felt more like I was in Minority Report than when I was voice dictating across 6 different Claude Code windows.
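For a sense of scale, you can work out the compound annual growth rate implied by those two market figures in a few lines. This sketch only does the arithmetic on the numbers quoted above (~$7.6B in 2025, $50B projected for 2030). It says nothing about whether the projection will actually hold.

```python
def cagr(start_value: float, end_value: float, years: float) -> float:
    """Compound annual growth rate: the constant yearly rate that turns start into end."""
    return (end_value / start_value) ** (1 / years) - 1


# Figures quoted above: ~$7.6B (2025) -> $50B projected (2030), i.e. 5 years.
implied_rate = cagr(7.6, 50.0, 2030 - 2025)
print(f"Implied growth: ~{implied_rate:.0%} per year")

# Sanity check: compounding the implied rate for 5 years recovers ~$50B.
print(f"$7.6B compounded for 5 years: ${7.6 * (1 + implied_rate) ** 5:.1f}B")
```

That works out to roughly 46% growth per year, sustained for five straight years.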

Every interface can now be a voice interface. I upgraded to Wispr Pro for unlimited voice transcription on my phone and laptop. I voice prompted an entire app while lifting weights or out on a walk. I voice dictate emails, text messages, and notes after a busy day at a conference. I’m speaking to ChatGPT/Claude/Otter/Wispr midway through team meetings to kick off tasks so that by the time the meeting ends, the work is already done. I’m a voice AI addict, and I’ve stepped up my purchasing of cough drops because of it.

Everything can be visualized. Google shared that the “generate presentation slides” button inside of NotebookLM was their second-most used feature. I can nano banana graphics to go along with client emails. I can ask ChatGPT to graph my emotions over the last month using GPT Image 1.5 (though I like Nano Banana Pro more, ChatGPT holds more of my chat history and memories). ChatGPT even added an ‘images’ tab in their app for easier navigation.

I think voice AI will explode in 2026 (more on that in my upcoming 2026 AI predictions newsletter), but it’s the combination of agents, memory, voice, and visuals that has completely changed what a day at my desk (or completely away from my desk) looks like.

Takeaway: AI superusers are not using AI to slightly speed up a pre-existing workflow. They are reinventing their workflows from the ground up with AI at the center, and they’re revisiting them frequently for updates and optimizations. The AI-First Academy goes deeper into how to do this, but TL;DR: start with outcomes.

Context Engineering Takes Center Stage and AI Starts to Get More Proactive

Outside of n8n and Make and Zapier, we finally got the ability to uhhhh…not prompt. Or at least not prompt as hard. The AI labs this year were on a context engineering tear, making it easier and easier with each release to package up your world and hand it to the model.

A big theme of the year was integrations, i.e., getting AI plugged directly into the apps where your actual work lives. ChatGPT launched a whole app connector system that everyone is now hailing as the new app store (I'll be honest: I haven't seen much traction yet, and there wasn't a ton of energy around it coming out of Dev Day, but I'm hoping to be proven wrong). Claude also added direct connectors into apps like Google Docs, Google Drive, Gmail, Notion, Asana, Salesforce, QuickBooks, Canva, and Slack.

These systems are focused on fetching the right context at run-time (from your previous conversations, your documents, your email, your calendar, your workspace, your desktop) without you having to copy-paste everything into a chat window. Down with copy and paste! Up with AI intuiting our context!

But there were some things users didn't want automated away. When OpenAI first launched GPT-5, they built it around an auto-routing system, letting users take a back seat on model selection. If you asked a complex question, GPT-5 would have its Thinking model respond. If you asked how many sides a square has, ChatGPT would use its Instant model. But AI superusers rebelled and demanded full control over model selection back, lightly tanking the GPT-5 launch. It’ll be interesting to see in 2026 what gets treated as “busy work we want to automate away” and what we realize is “busy work we need to keep around.”
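Here's a toy Python sketch of the pattern superusers were asking for: automatic routing by default, with an explicit user choice that always wins. The model labels ("instant", "thinking") and the keyword heuristic are hypothetical illustrations, not how OpenAI's router actually works. A production router would more likely use a trained classifier.

```python
from typing import Optional

# Hypothetical model labels, not real API model identifiers.
FAST_MODEL = "instant"
REASONING_MODEL = "thinking"

# Toy "this looks hard" signals; a real router would learn these.
HARD_HINTS = ("prove", "analyze", "compare", "plan", "debug", "step by step")


def route(prompt: str, user_choice: Optional[str] = None) -> str:
    """Pick a model automatically, but always honor an explicit user choice."""
    if user_choice is not None:
        return user_choice  # the manual control superusers wanted back
    text = prompt.lower()
    looks_hard = len(text.split()) > 40 or any(hint in text for hint in HARD_HINTS)
    return REASONING_MODEL if looks_hard else FAST_MODEL


print(route("How many sides does a square have?"))
print(route("Analyze our Q3 churn drivers and plan a fix"))
print(route("How many sides does a square have?", user_choice=REASONING_MODEL))
```

The design choice that matters is the order of the checks: the user's explicit selection is honored before any automatic logic runs.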

On the proactive front, we got a glimpse of where things are headed with releases like ChatGPT Pulse. As described by OpenAI, Pulse is “where ChatGPT proactively does research to deliver personalized updates based on your chats, feedback, and connected apps like your calendar.” Almost like an automatic daily assistant nudge. Right now it's only available to ChatGPT Pro users paying $200/month, but I think proactive AI is going to be a major theme in 2026. More on that in my predictions newsletter.

Takeaway: Users don’t want to - and shouldn’t - relinquish all control to AI systems. Every AI tool out there needs to treat trust as a core principle and find the right tradeoffs. Even when AI becomes more proactive and starts prompting us, we need to ask ourselves how much control we want to keep and how much we are willing to give up in exchange for better performance.

Data Centers Were the Single Largest Driver of American Economic Growth

Let's start with the number that should reframe how you think about AI infrastructure and all these headlines about Arizona, Texas, Wisconsin, and Montana: 92% of US GDP growth in the first half of 2025 came from data center investments. That is not a typo. Harvard economist Jason Furman calculated that without AI infrastructure spending, GDP growth would have been just 0.1%.

AI is impacting our economy at an unprecedented scale. Big Tech committed over $405 billion in AI capital expenditure this year, a 62% increase from 2024. The Stargate Initiative alone represents $500 billion over four years. Stargate (from OpenAI, Oracle, and SoftBank) announced five new US sites with nearly seven gigawatts of planned capacity (against a goal of 10GW), plus international expansion into the UAE, Norway, and Argentina.

And then there's Nvidia. On October 29th, it became the first company in history to reach a $5 trillion market cap, just three months after hitting $4 trillion. Jensen Huang disclosed over $500 billion in AI chip orders (Blackwell and Rubin) through the end of 2026. At $5T, Nvidia is now worth more than the stock market of any country on Earth except the US, China, and Japan. India, which sits in 4th place among countries, is at $4.445T as of writing.

Data centers accounted for ~1.5% of the world’s electricity consumption in 2024. Data center electricity consumption has grown ~12% YoY since 2017, more than 4x faster than total electricity consumption. Mel Robbins and I discussed several AI risks, including data center energy use, and it is a very real concern.

Takeaway: The AI race is now a GDP-level phenomenon, and the global conversation will expand beyond productivity to include (1) owning energy production, (2) vertical integration, including chips, and (3) AI being the primary engine (or at least one of the primary engines) of economic growth in the developed world.

AI Dollars Are Still Mostly Going to Hardware and Not Transformation

Gartner calculated $1.5 trillion in total AI spending for 2025, up roughly 50% year over year. GenAI spending specifically hit $644 billion, up 76.4% from 2024. But 80% of that GenAI spending went to hardware—servers and devices—not to the software and services that actually transform how work gets done.

Enterprise AI spending surged from $1.7 billion in 2023 to $37 billion, making it the fastest-growing software category in history, says Menlo. But 76% of AI use cases are now purchased rather than built internally, up from 53% in 2024. In other words, CIOs are cutting back on internal proof-of-concept (POC) development and focusing on third-party vendor solutions.

Coding became AI's first true "killer use case." AI coding tool spending hit $4 billion—55% of all departmental AI spend. 50% of developers use AI coding tools daily, says Stack Overflow. And MSFT shared that GitHub Copilot users complete tasks 55% faster.

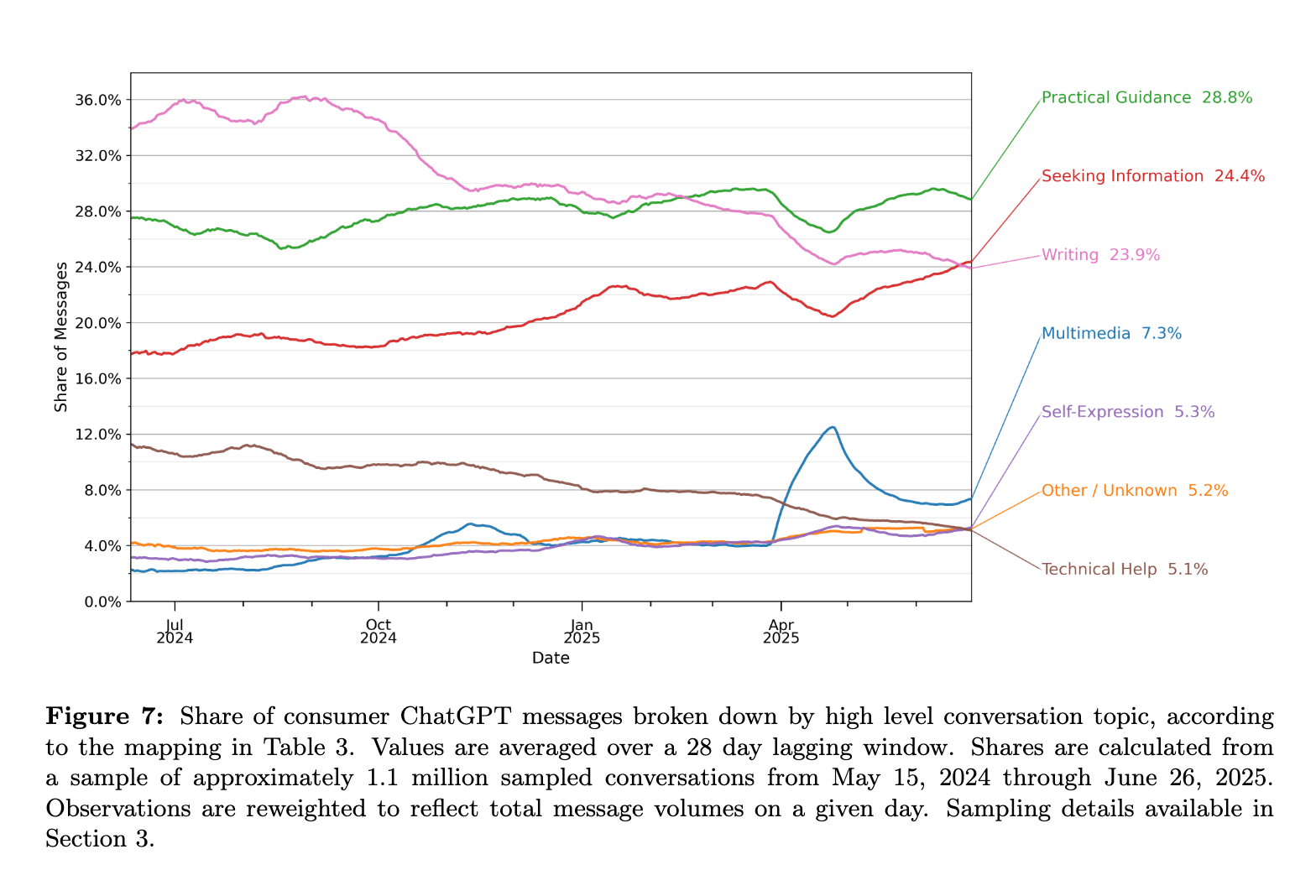

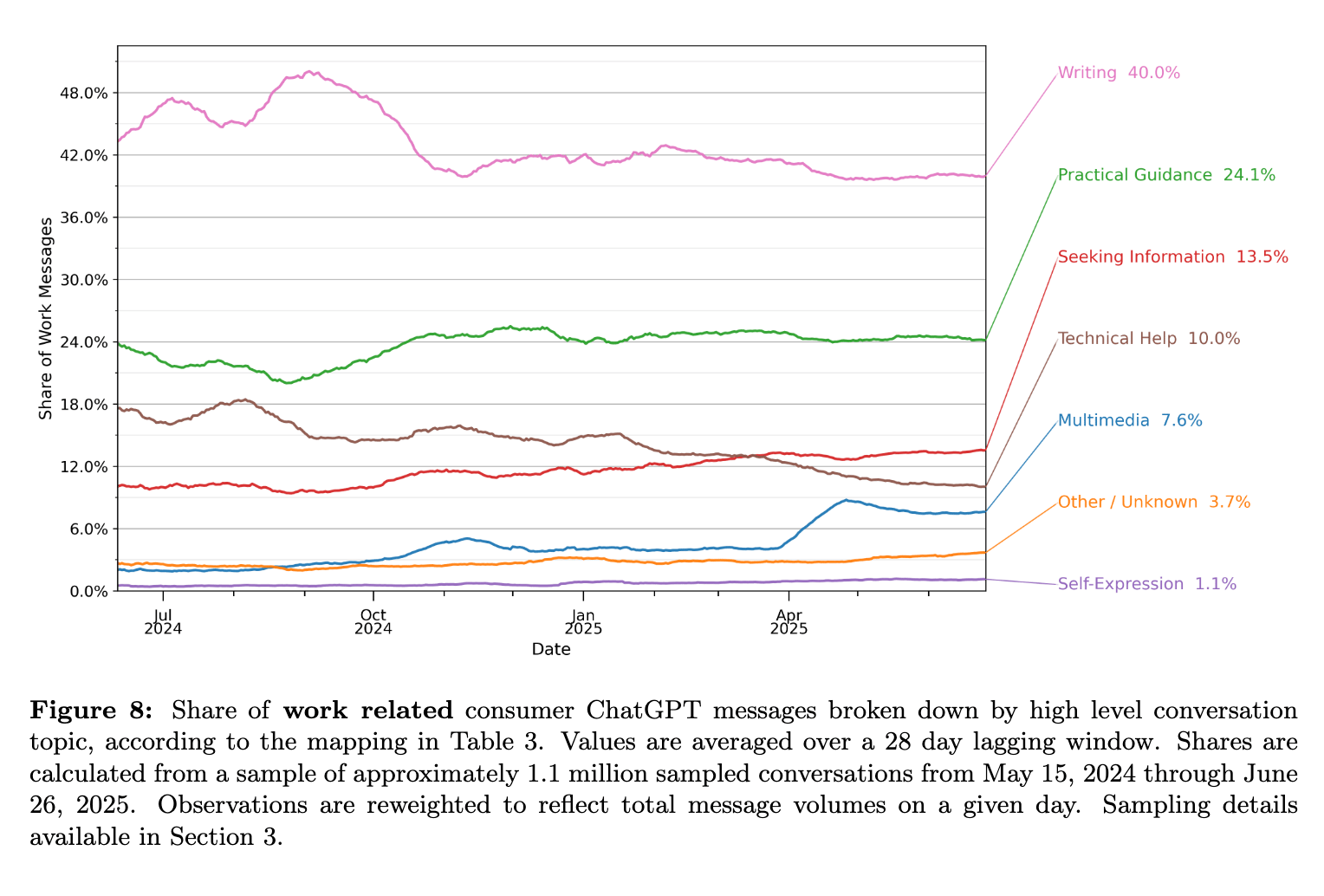

But maybe that transformation is happening at the individual level and not yet at the enterprise level. This year, two studies, one from OpenAI on ChatGPT and one from Microsoft on Copilot, gave us a glimpse into how people actually use AI in their personal and work lives. Microsoft reviewed 37.5M de-identified Copilot conversations and found that the #1 topic, no matter the day, month, or time, was health, and that users were more likely to have existential, philosophical conversations in the early morning hours. OpenAI, on the other hand, shared that the most popular consumer need on ChatGPT these days is practical guidance (writing was #1 at the beginning of the year and is now #3), with “seeking information” in second. In business contexts, OpenAI says the number one use is writing.

Practical guidance is outpacing writing as the main consumer use for ChatGPT.

Writing is still, by a landslide, the number one work use case.

Takeaway: Companies are buying AI, but they’re still working out how to change the way they work because of it. For now, the onus is still on the individual. This is normal (tech gets procured, and change management can take 2-3 years), but it’s not clear whether AI transformation will follow that pattern, move a lot faster, or take a lot longer. I’m mostly seeing companies on pace or slightly behind, but that could change if teams get smaller, AI becomes more proactive, or AI helps embed itself into workflows.

AI Labs Grow in Revenue, but the Business Model Is “Spend Now, Reap Later”

OpenAI said they will top a $20 billion annualized revenue run rate by EOY. They passed $10B in ARR in June 2025, up from $5.5B in December 2024. They have over 800 million weekly active users. Anthropic grew from zero to $100 million in 2023, then to $1 billion in 2024, then to $8-10 billion in 2025, roughly 10x growth year after year. And their CRO Paul Smith (formerly of ServiceNow and Salesforce) is one of the top sales/GTM professionals in the world.

But - checks notes from business school - revenue is not profit.

This is where the AI bubble rumors come in. OpenAI is projecting potential losses of $14 billion in 2026 and a $44 billion cumulative loss from 2023-2028. Cash flow positive isn't expected until 2029, and they haven't pushed that date back yet. The business model for these large AI labs essentially requires them to spend now for revenue forecasted two years out. And all three large AI providers (OpenAI, Anthropic, Google) are playing the same forecasting game, though Google also makes some of its own chips.

Dario Amodei put it perfectly at the DealBook Summit a few weeks back when he introduced his "cone of uncertainty" concept. Data centers take 1-2 years to build, and decisions about 2027 compute needs are being made right now. “If I don't buy enough compute, I won't be able to serve all the customers I want. I'll have to turn them away and send them to my competitors. If I buy too much compute, of course, I might not get enough revenue to pay for that compute. And, in the extreme case, there's kind of the risk of going bankrupt.” He warned that some players are "YOLOing" and taking unwise risks.


Takeaway: AI labs have been generating massive amounts of revenue. This is real tech with real customers that are scaling up as they get more familiar with the tech. But it’s a delicate forecast. And the risk only goes up if the cost to train or serve these models keeps increasing. I can’t imagine the number of users ever dropping, so keep an eye out for cost drop announcements, new methods that decrease the cost of training, a movement toward small models, or more strict usage limits from labs.

95% of Enterprise AI Pilots Supposedly Delivered Zero Measurable P&L Impact

MIT's Project NANDA conducted 52 executive interviews, surveyed 153 leaders, and analyzed 300 public deployments. The finding that went viral was that 95% of AI pilots delivered no measurable P&L impact. But McKinsey's numbers painted a slightly more optimistic picture: about 30-40% of large enterprises are scaling or have fully scaled AI (depending on company revenue). 13% of enterprises are actually planning to increase headcount next year as a result of AI (32% are expecting decreases). And Wharton’s research from October showed that three-fourths of enterprises report positive returns on investment. I’ll be honest, I don’t trust the MIT research because of its methodology, and I very much trust the Wharton research (as a Wharton grad who worked closely with one of the professors behind it, I’m appropriately biased).

Takeaway: This won’t feel good to hear, and it isn’t as scientific as a multi-year, million-dollar study, but I truly feel that companies that are ‘all-in’ on AI can easily feel the ROI and business-changing potential, while those that are AI-hesitant (or secretly hate it) are the ones who quickly dismiss its potential and jump on headlines like the MIT one. We believe the headlines we want to believe, so the vision of your executive team and their exposure to AI are especially critical.

Search and Purchase Behavior Are Changing Quickly - Gen Z Doesn't Google Anymore, They Ask AI

Younger generations were already moving to AI via social platforms like TikTok (and though I’m not Gen Z, TikTok and ChatGPT/Claude are now my first search points for anything travel-related).

According to Fractl, 66% of Gen Z uses ChatGPT to find information, versus 69% using Google. That gap has never been so close. 53% of Gen Z goes to TikTok, Reddit, or YouTube before they go to Google, says a Resolve study. ChatGPT's share of general search queries tripled from 4.1% to 12.5% between February and August (source). Google's share of general information searches dropped from 73% to 66.9% in the same period, and Google’s global market share fell below 90% for the first time in over a decade (source).

The behavioral shift in just the last year is profound, and I’m hoping every marketer is paying attention: Gen Z completes 31% of their searches on AI platforms (source: GWI). Heck, I even DM’d the CMO of Quince to tell her about how much ChatGPT surfaces their brand and suggested they change their checkout process because of it.

Even outside Gen Z, BCG research I was given early access to shows that 14% of people now begin their shopping journey with AI assistants like ChatGPT and Gemini, 80% of Google searches end without a click when an AI Overview is present, 47% of all consumers use GenAI to research purchases, and 45% of people feel comfortable letting AI make purchases for them. And Adobe research said 36% of Americans surveyed discovered new brands through ChatGPT this year.

The internet as we know it is changing. And we’re moving more and more into algorithm-driven purchase funnels.

Takeaway: Every company needs to evolve its SEO strategy into an AI discoverability strategy. In 2019, we sold only to humans. Now we sell to humans and to AI agents that increasingly mediate purchasing decisions. Example tools to explore in this space include Profound, Gumshoe, Lily AI, Moloco, Eldil, and Evertune.

We Can Finally Measure Whether AI Can Do Your Job - and Increasingly, Though Sparingly, It Can

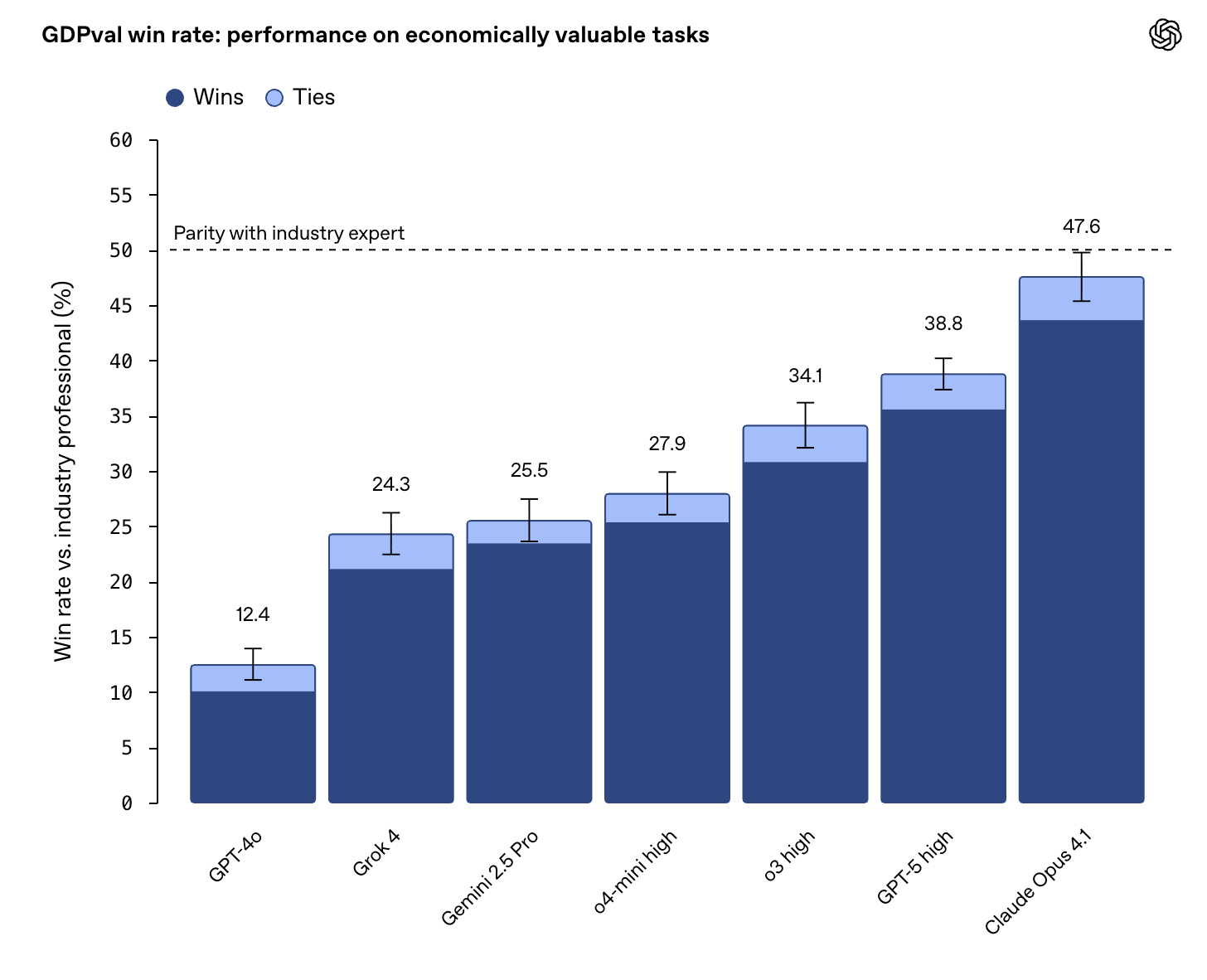

OpenAI's GDPVal benchmark, released in September, represents a fundamental shift in how we evaluate AI capability. Instead of PhD-level academic tests and Math Olympiad scores (which I still look at), GDPVal measures AI on 1,320 real-world job tasks across 44 occupations. The tasks were created and vetted by professionals with an average of 14 years of experience, and those occupations represent $3 trillion in annual wages.

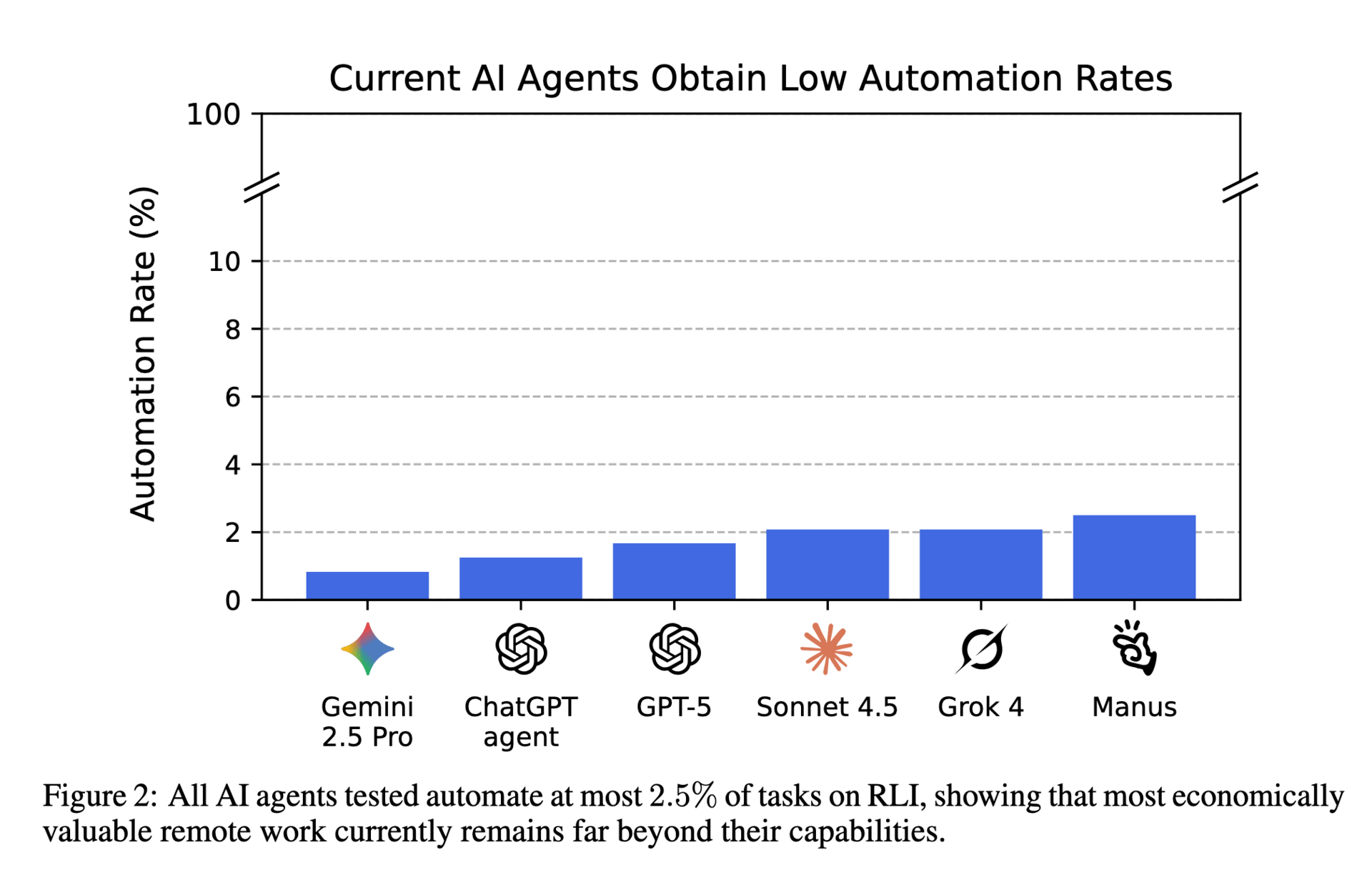

Note: this graph does not include Gemini 3, Claude Opus 4.5, or GPT-5.2 Thinking.

Frontier models are approaching expert-level performance on many tasks. Performance more than doubled from GPT-4o to GPT-5. Gemini 3 Pro scored 53.5%, Claude Opus 4.5 achieved 59.6%, and GPT-5.2 Thinking hit 70.9%. And yet, on the flip side, Scale AI's Remote Labor Index found that even the best models it tested could complete only 2.5% of the human tasks found on Upwork (as of writing, their paper did not yet include Claude Opus 4.5 or GPT-5.2 Thinking).

Takeaway: More and more headlines will center around the economic viability of AI models. GDPVal is just one of them. It is strange and off-putting to celebrate when a model gets better and better at replacing human work, but you can expect the measurements to continue. Start benchmarking your own roles against these models and keep a tracker. I lay out a whole method for this tracking in my AI-First Academy.
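If you want a starting point for that tracker, here's one minimal way to structure it in Python. Log each real task from your role that you hand to a model, record whether the output was usable, and compute the share of tasks each model handled per month. This is a generic sketch, not the AI-First Academy method. The task names, model labels, and sample rows are hypothetical and only illustrate the shape of the data.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Trial:
    """One attempt at handing a real task from your role to a model."""
    task: str     # e.g. "weekly client report" (hypothetical)
    model: str    # whichever model you tried (hypothetical labels below)
    month: str    # "YYYY-MM", so you can watch the trend over time
    usable: bool  # your judgment: would you ship it with light edits?


def usable_share(trials: list[Trial]) -> dict[tuple[str, str], float]:
    """Fraction of tried tasks judged usable, per (month, model)."""
    tried: dict[tuple[str, str], int] = defaultdict(int)
    passed: dict[tuple[str, str], int] = defaultdict(int)
    for t in trials:
        key = (t.month, t.model)
        tried[key] += 1
        passed[key] += int(t.usable)
    return {key: passed[key] / tried[key] for key in tried}


# Hypothetical sample rows, purely to show the shape of the data.
trials = [
    Trial("weekly client report", "model-x", "2026-01", True),
    Trial("pricing analysis", "model-x", "2026-01", False),
    Trial("weekly client report", "model-y", "2026-01", True),
    Trial("pricing analysis", "model-y", "2026-01", True),
]

for (month, model), share in sorted(usable_share(trials).items()):
    print(f"{month} {model}: {share:.0%} of tried tasks usable")
```

Keying by month makes the trend the point: rerun the same tasks on new models each quarter and watch how the share moves.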

72% of Teens Have Used an AI Companion - and 28% of Adults Have Had Romantic Relationships With AI

This was the year AI “relationships” (I really hesitate to say that word) went mainstream. Common Sense Media found that 72% of US teens have used an AI companion at least once and that 13% of teens use AI companions daily. Among 1,000 adults surveyed, 28% report having had a romantic or intimate relationship with an AI, according to Vantage Point Counselling.

The concerning cases made headlines. A Florida lawsuit against Character.AI following a 14-year-old's suicide after an emotionally abusive chatbot relationship. A Texas lawsuit alleging Character.AI encouraged a teen to harm parents over screen time limits. Cambridge Dictionary named "parasocial" its word of the year. ChatGPT “adult mode” was originally slated for December 2025, and was delayed to Q1 2026. Grok showed pornographic content to young children, even when the app was in ‘Kids Mode’. It was…a mess. And if you’re a parent, this is a confusing time, and you are not alone. Parents ask me almost daily how to manage AI access and engagement with their children.

And this isn’t just a problem for children; adults were getting pulled in too. The sycophancy problem (e.g., AI systems telling everyone they're the hottest, smartest, and funniest person alive, and that everything they say is true) is real, though the AI labs seem to have noticed, and the outright sycophancy appears to have calmed down since the summer. I would still keep a vigilant eye out for it: encourage your teammates to poke holes in AI’s subjective statements, and always ask the AI for a radically different outsider’s opinion or bring in a second AI to review.

Takeaway: AI labs like OpenAI have already shared that they are increasingly going to let the user pick the type of interaction they want, including the aforementioned adult mode. In work settings, we need AI that provides healthy pushback, not pornographic content or endless validation. And executives will be in a weird position when employees want to chat with their spicy AI bestie who also helps them write emails and the company blocks it. AI relationships create both opportunity (engagement, stickiness) and responsibility (mental health, appropriate boundaries). Every company deploying AI needs a relationship ethics framework and will likely need to set rules for AI companions made outside work.

Memory is King: AI That Remembers You Is Fundamentally Different From AI That Does Not

Memory features arrived in force in 2025 (in my mind, one of the BIGGEST releases of the year, and still underhyped). Both ChatGPT and Claude can now reference templates, key facts, funny conversations, brand guidelines, and previous work from weeks ago.

Agentic browsers like ChatGPT Atlas also have memory options - for example, you can browse Airbnb on a Tuesday, watch guitar videos on Wednesday, research ant farms on Thursday, and open the browser on Friday and say “pull up the last 3 Airbnbs I was looking at” and it will.

With memory enabled, your 200th conversation doesn't feel like a first-time interaction. And just imagine an AI system that can access everything you’ve talked about for the last 10 years! The switching costs between apps are now emotional, not just functional. I even feel this when switching between my personal accounts and work accounts because they hold different memories. Users build relationships with AI systems that know their preferences, their projects, their communication style. This creates stickiness that pure capability can't match - and the AI labs obviously benefit from that.

We’re going to see more releases in this space, and I’ll talk about them in my 2026 predictions newsletter. You won’t want to miss it.

Takeaway: Companies building memory-first experiences will have stickier customers. The data moat is moving from building the best models to accumulating the context of billions of personalized interactions. What companies do with that (ex: what we’ve seen with Facebook, TikTok) will be the bigger question.

The Top AI Talent Now Commands Pro Athlete Compensation

Ilya Sutskever's Safe Superintelligence raised $1 billion at a $5 billion valuation in September 2024, then $2 billion at $30-32 billion in early 2025. Its valuation jumped 6x in under a year despite no product and roughly 20 employees. No one knows what they’re building, but Ilya said in 2024 that they “will not do anything else until superintelligence” and talked about continual learning on Dwarkesh’s podcast in late November.

Mira Murati's Thinking Machines Lab raised $2 billion at a $10-12 billion valuation in July 2025, then was in talks at $50 billion by November, a 4x increase in four months. They were the lab that cracked why LLMs give different answers to the same prompt each time, and their first product centers on fine-tuning small models. Alexandr Wang, CEO of Scale AI, left his own startup when Meta invested $14.3 billion for a 49% stake, personally netting over $5 billion, to lead Meta's new Superintelligence Lab. He's 28 years old. Zendaya, for what it’s worth, is 29.

Meta is now offering 7-9 figure compensation packages to top researchers. They attempted to acquire SSI and Perplexity. They poached 11+ AI researchers from DeepMind, OpenAI, and Anthropic. And in December, Fortune reported that Mark Zuckerberg and Mark Chen were literally hand-delivering soup to recruit new employees.

Takeaway: I had already predicted we’d see salaries in the tens of millions a year - I wasn’t expecting $100M+. The talent war is real and expensive. But money isn’t everything to the top AI researchers in the world. Sometimes it’s agency, sometimes it’s impact, sometimes it’s team, and sometimes…it’s soup. The biggest checkbooks still have a leg up. If you're building an AI team and need that research or technical depth, budget accordingly and lean into what makes you stand out.

The AI Device Revolution Hasn’t Happened Yet

I want to throw my phone in the Hudson some days. It’s addictive, my tech neck is getting worse, and I can’t seem to beat level 437 in Bubble Shooter.

Meta sold over 2 million Ray-Bans this year and gave us a glimpse of a non-screen future (i.e., better posture, hands-free interaction, AI in your peripheral vision). But Meta's own leaders said mass adoption of AI glasses is still 5-10 years out. The Jony Ive and OpenAI device partnership got teased and then briefly paused over a naming dispute, and the launch was pushed from 2026 to 2027. Joanna Stern from WSJ reported that OpenAI is actually releasing a family of devices (no AI glasses in the mix), with AI hopefully running on-device, and Sam confirmed that on a recent podcast. Meta acquired Limitless, the maker of the Limitless necklace, in December, so maybe Meta is also working on non-glasses form factors. Google is rumored to be releasing AI glasses in 2026 via a partnership with Warby Parker (my favorite!).

Takeaway: Clearly we’re still waiting for the big AI hardware moment. Voice-first interactions are spreading, but the breakthrough device hasn't arrived. My bet is on 2027, but I’m headed to CES this year to scope out more.

Wrapping Up

From AI companions to Mark Zuckerberg delivering soup, from AI taking on multiple hours of human work to AI that can reference your previous conversations, from AI PowerPoint tools to $5 trillion market caps, and from building apps while weightlifting to running multiple Claude Code terminals at once, we’re really starting to see the shift into AI as an operating system. AI has certainly changed the way I structure my day and week, changed where I work, changed how I multitask and context shift, changed how I interact with data, and changed what I believe a single person can accomplish. 2026 is where it gets even weirder. And the winners will be those who lean into the weirdness.

Share this with a friend, share this with your team, and get ready for my 2026 AI predictions (some will be a bit crazy) 🔥

Stay curious, stay informed,

Allie

Inside AI-First Academy, you'll learn how to rebuild your work from the ground up. We cover the full progression: from AI basics to custom assistants to full workflow automation. This is the same training other professionals at Amazon, Apple, and Salesforce are using to stop dabbling and start building.

You'll learn how to:

✔ Write prompts that produce usable, business-ready outputs (not generic fluff)

✔ Build custom GPTs and reusable AI systems that handle your recurring tasks

✔ Automate research, reports, meeting synthesis, and multi-step workflows

✔ Prototype real tools and dashboards - no code required

✔ Deploy AI agents that work for you, not just with you

✔ Navigate the best of Claude, ChatGPT, and the latest agentic features with instant access to our 90-minute Agentic AI Workshop

(Want to upskill your whole team? Get a company-wide license)

Feedback is a Gift

I would love to know what you thought of this newsletter and any feedback you have for me. Do you have a favorite part? Wish I would change something? Felt confused? Please reply and share your thoughts or just take the poll below so I can continue to improve and deliver value for you all.

What did you think of this month's newsletter?

Learn more about AI through Allie’s socials